ISO/IEC 42001:2023 – The World’s First AI Management System Standard

Introducing ISO/IEC 42001:2023: Managing AI Systems with Confidence

Artificial Intelligence (AI) is now deeply embedded in daily life, from healthcare diagnostics and financial risk models to self-driving vehicles and personalized online services. The opportunities are enormous, but so are the risks. Organizations adopting AI face questions about ethics, transparency, and bias.

To address these challenges, ISO and IEC jointly published ISO/IEC 42001:2023, the world’s first standard for an AI Management System (AIMS). Released in late 2023, the standard gives organizations a structured way to develop, use, and monitor AI systems responsibly. It builds on the idea that AI is not just a technical tool, but something that needs governance at the organizational level.

Artificial Intelligence has moved from being a futuristic idea to a business-critical technology. ISO/IEC 42001:2023 is the first international standard that gives organizations a structured way to manage AI responsibly.

What is ISO/IEC 42001:2023?

ISO/IEC 42001:2023 is a management system standard specifically for Artificial Intelligence. Like ISO 9001 for quality or ISO/IEC 27001 for information security, it defines requirements for establishing, implementing, maintaining, and continually improving an AI Management System (AIMS).

It applies to organizations of any size and sector that design, develop, deploy, or use AI systems. The standard is not limited to technology companies; it also covers industries such as healthcare, finance, transport, education, and government, where AI is increasingly used in critical decision-making.

In practice, this management system framework helps organizations implement AI responsibly by building risk assessment and ethical use into their day-to-day operations.

This standard addresses key issues such as:

- Governance of AI model development

- Risk and impact assessments for AI use cases

- AI system transparency, explainability, and fairness

- Data quality and bias mitigation

- Monitoring and post-deployment control

- Compliance with AI-related laws and regulations

For organizations ready to establish trust in their AI systems, Pacific Certifications offers audit and certification services aligned with ISO/IEC 42001:2023. Reach us at [email protected].

Key Clauses of ISO/IEC 42001:2023

| Clause | Focus | Application in AI |
|---|---|---|
| Context of the Organization | Understanding internal and external factors | Identifying how AI impacts stakeholders and society. |
| Leadership | Management responsibility | Assigning roles for AI governance and accountability. |
| Planning | Risk and opportunity management | Assessing AI risks, bias, fairness, and regulatory requirements. |
| Support | Resources, competence, and awareness | Ensuring skilled staff, ethical training, and data governance. |
| Operation | Implementation of processes | Developing, testing, and monitoring AI systems. |
| Performance Evaluation | Monitoring and measurement | Auditing AI models for accuracy, fairness, and security. |
| Improvement | Corrective actions and continual improvement | Updating systems to reflect new risks, regulations, and technologies. |

Why ISO/IEC 42001:2023 Is a Game-Changer for Ethical AI Development

In recent years, the world has witnessed growing concerns around AI ethics, bias, misuse, and opacity. From biased facial recognition systems to opaque generative models, public trust in AI is eroding. ISO/IEC 42001:2023 steps in as a governance blueprint for ethical AI.

The standard introduces principles of ethical design and development, requiring organizations to:

- Define ethical objectives and stakeholder expectations

- Assess risks such as discrimination, misinformation, or unintended consequences

- Ensure diversity and inclusivity in data and model design

- Establish internal oversight and accountability mechanisms

By aligning internal AI practices with ISO/IEC 42001:2023, organizations can move beyond vague ethical promises and instead demonstrate certifiable, auditable proof that their AI is developed and used responsibly.

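This will become increasingly critical as laws and policy frameworks such as the EU AI Act, the U.S. Blueprint for an AI Bill of Rights (a non-binding framework), and India’s Digital Personal Data Protection Act (a data protection law that also affects AI systems) gain traction globally.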

Pacific Certifications helps enterprises and AI innovators operationalize AI ethics with ISO/IEC 42001-compliant governance systems. Contact us to learn more at [email protected]!

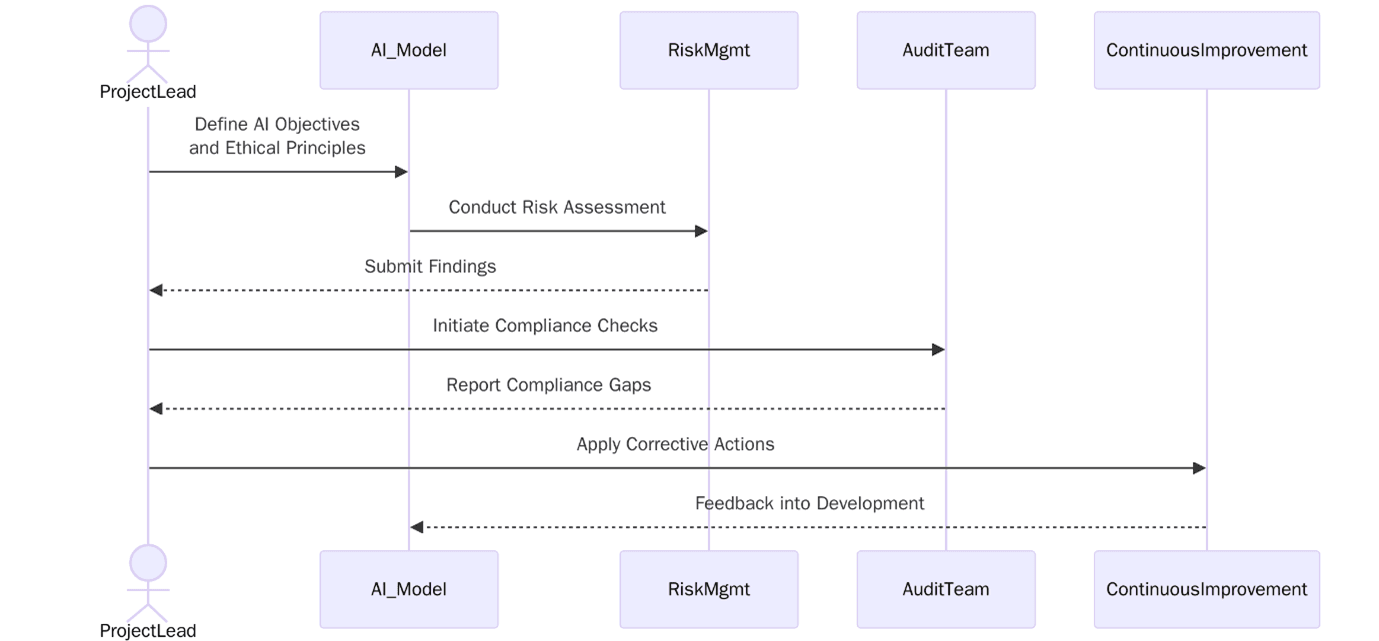

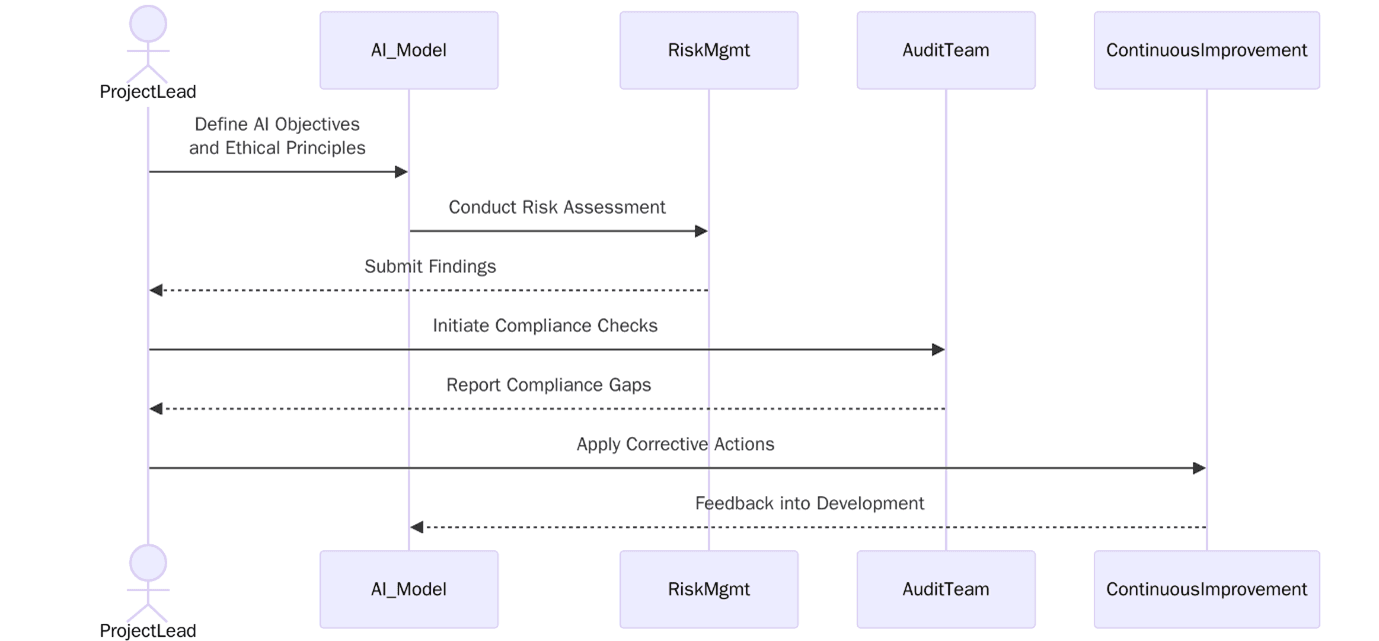

How to Implement ISO/IEC 42001:2023 in Your AI or ML Projects

Implementing ISO/IEC 42001 requires a methodical approach, especially in organizations already deploying or experimenting with AI/ML systems.

Here’s how to get started:

- Define the scope of AI activities, such as model development, data management, or third-party use.

- Establish policies on fairness, transparency, security, and accountability.

- Conduct risk assessments for bias, discrimination, misinformation, or unintended harm.

- Document AI lifecycle processes, from data collection and model training to deployment and monitoring.

- Ensure data quality and traceability, with clear ownership and audit trails.

- Train teams on ethical AI principles and relevant legal requirements.

- Monitor AI systems for accuracy, fairness, robustness, and unintended outcomes.

- Perform internal audits and management reviews of the AIMS.
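
The monitoring step above can be made concrete with a simple fairness check. The sketch below is illustrative only: ISO/IEC 42001 does not prescribe a specific metric, and the metric chosen here (demographic parity difference), the sample data, and the `THRESHOLD` value are all assumptions made for the example.

```python
from collections import defaultdict


def selection_rates(predictions, groups):
    """Return the share of positive predictions (1) for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += pred
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_difference(predictions, groups):
    """Largest gap in selection rate between any two groups."""
    rates = selection_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())


# Hypothetical monitoring sample: 1 = approved, 0 = declined.
predictions = [1, 0, 1, 1, 0, 1, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

gap = demographic_parity_difference(predictions, groups)

# Assumed internal tolerance; not a value taken from the standard.
THRESHOLD = 0.2
needs_review = gap > THRESHOLD
print(f"Selection-rate gap: {gap:.2f}, needs review: {needs_review}")
```

A result above the tolerance would typically be logged and escalated through the oversight and corrective-action processes the AIMS defines, rather than handled ad hoc by the model team.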

Tip: Start small. Organizations should begin with a risk-based gap analysis comparing their current AI practices to the requirements of ISO/IEC 42001. From there, they can align existing data governance and IT security policies with the new AI-specific controls before moving to full certification.
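
A gap analysis of this kind can begin as a plain checklist grouped by clause. The following is a minimal sketch under stated assumptions: the clause names follow the key clauses listed in this article, while the checklist items and their true/false status are hypothetical examples, not requirements quoted from the standard. It also assumes every clause has at least one item.

```python
# Hypothetical checklist: True means the practice is already in place.
checklist = {
    "Context of the Organization": {
        "AI stakeholders identified": True,
        "AIMS scope documented": False,
    },
    "Leadership": {
        "AI policy approved": True,
        "Governance roles assigned": True,
    },
    "Planning": {
        "AI risk assessment performed": False,
        "AI objectives defined": False,
    },
}


def gap_report(checklist):
    """Return (clause, coverage, missing_items) rows, biggest gaps first."""
    report = []
    for clause, items in checklist.items():
        missing = [name for name, done in items.items() if not done]
        coverage = 1 - len(missing) / len(items)
        report.append((clause, coverage, missing))
    # Sort by coverage so the least-covered clauses surface at the top.
    return sorted(report, key=lambda row: row[1])


for clause, coverage, missing in gap_report(checklist):
    open_items = ", ".join(missing) if missing else "none"
    print(f"{clause}: {coverage:.0%} covered; open items: {open_items}")
```

Sorting by coverage keeps the "start small" advice actionable: the clauses at the top of the report are the ones to address first.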

For AI and ML teams already operating within ISO/IEC 27001 or ISO 9001 environments, ISO/IEC 42001 integrates well as part of a broader integrated management system.

ISO/IEC 42001 vs ISO/IEC 27001: Security and Governance in AI

Although ISO/IEC 27001 is the cornerstone standard for information security management, it does not fully cover the unique risks of AI systems, such as model hallucination, data drift, or algorithmic opacity.

Here’s how they differ and complement each other:

- ISO/IEC 27001 focuses on protecting information assets—ensuring confidentiality, integrity, and availability of data and systems.

- ISO/IEC 42001 focuses on managing AI-specific risks and ethical obligations—including fairness, transparency, and lawful use of AI systems.

Together, these standards provide a holistic framework for organizations that operate in AI-heavy environments:

- ISO/IEC 27001 ensures the security of the infrastructure and data.

- ISO/IEC 42001 ensures the responsible, ethical, and effective use of AI built on that infrastructure.

Forward-looking organizations are already seeking dual certification to gain a market advantage and reduce exposure to AI governance failures.

Pacific Certifications offers bundled ISO/IEC 27001:2022 and ISO/IEC 42001 certification audits. For integrated management planning, contact [email protected].

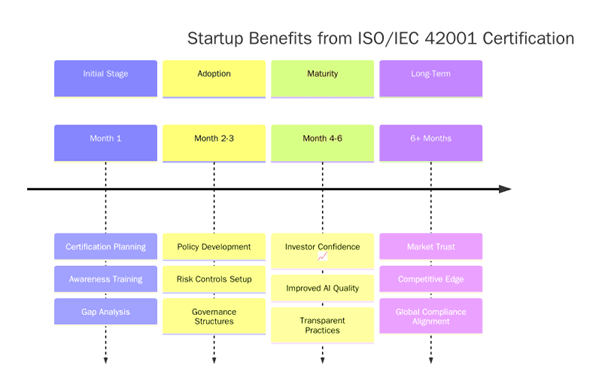

What Are the Benefits of ISO/IEC 42001:2023 Certification for AI-Based Startups?

Startups operating in AI are often focused on rapid growth and innovation, but failing to build governance early can lead to regulatory noncompliance, data misuse, or PR disasters. ISO/IEC 42001 provides a scalable, structured governance model tailored to fast-moving tech teams.

Here are the key benefits of certification for AI startups:

- Builds trust with investors and clients: Certification demonstrates commitment to responsible innovation and risk-aware product development.

- Simplifies compliance: Supports adherence to emerging AI regulations (such as the EU AI Act) and to data protection laws (such as GDPR and CCPA).

- Attracts enterprise buyers: Many large organizations now require vendors to show alignment with AI risk management frameworks.

- Prepares for scale: Helps startups develop audit-ready systems and processes that will support sustainable growth.

- Improves product quality and model robustness: Encourages cross-functional collaboration between technical, legal, and ethical teams.

The AI market is projected to exceed USD 1.5 trillion by 2030, with applications expanding across every major sector. At the same time, regulators are moving quickly: the EU AI Act entered into force in August 2024, with most of its obligations applying from August 2026, and similar frameworks are under discussion in the U.S., Canada, and Asia.

Public trust remains fragile. Surveys show that over 60% of consumers are concerned about bias or misuse of AI, and businesses deploying AI are under pressure to prove transparency. Standards like ISO/IEC 42001:2023 directly address this gap, giving organizations a credible way to show accountability.

For industries like healthcare, finance, and public services, where AI decisions carry high stakes, certification could soon become a differentiator or even a contractual requirement.

ISO/IEC 42001 – A Strategic Foundation for Responsible AI

As AI systems become more powerful, their risks become more profound. From hallucinating LLMs to biased credit scoring engines, the consequences of unmanaged AI are real, and growing. ISO/IEC 42001:2023 is the world’s first answer to this challenge, offering a globally recognized framework for responsible, ethical, and auditable AI governance.

Whether you’re an enterprise using AI in critical operations, or a startup building tomorrow’s breakthrough models, ISO/IEC 42001 helps you ensure that your AI works in alignment with people, policies, and purpose.

Pacific Certifications, an accredited certification body, offers end-to-end support for organizations seeking ISO/IEC 42001 audit and certification, so that you can implement and certify your AI management system with confidence.

How Pacific Certifications Can Help

At Pacific Certifications, we provide independent certification and auditing services for ISO/IEC 42001:2023. We work with organizations of all sizes, from tech start-ups developing AI products to large enterprises integrating AI into business processes.

With Pacific Certifications, you can:

- Get certified to ISO/IEC 42001:2023 as proof of responsible AI management.

- Align with ethical, legal, and regulatory expectations in AI governance.

- Build stronger trust with customers, partners, and regulators.

- Rely on accredited certification recognized worldwide.

Start your certification process today: email us at [email protected] or visit www.pacificcert.com to learn more.


Author: Sony
